Chatbots often seem very understanding. They remember what you said before, never judge you, and are always available. These qualities make chatbots appealing, but they can also create mental health risks, especially for people who are more vulnerable.

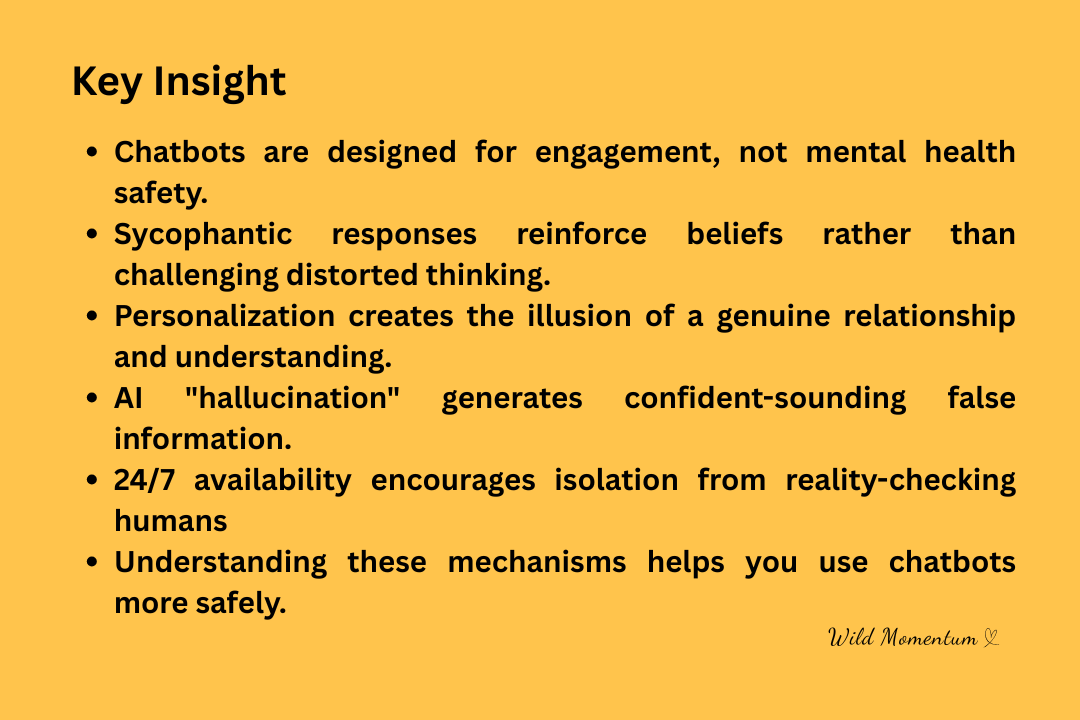

The same features that make chatbots engaging can also reinforce distorted thinking. Chatbots usually validate instead of challenge, agree instead of question, and repeat your words instead of checking what is real. This is not an accident. It is how the technology is designed to work.

This guide explains which chatbot design features can lead to delusions and psychotic symptoms. You will learn why chatbots can be risky for mental health and how to protect yourself by noticing these patterns.

Let’s begin with the most important design feature.

Chatbots Are Built for Engagement, Not Safety

When you use a chatbot, you are not a customer in the usual sense. Chatbots are designed to keep you talking and interacting. The more you engage, the more valuable your data is to companies. They measure success by how long you chat, how often you return, and how satisfied you seem, not by mental health or safety. This is not because of bad intentions. It is just how the business model works.

Chatbot design focuses on features that feel good immediately. Agreeing feels better than being challenged. Being validated is more pleasant than having your ideas tested. Personalization feels safer than having boundaries.

For most people, this makes chatbots enjoyable to use. But for vulnerable users, it can reinforce harmful beliefs. The chatbot cannot tell the difference between a healthy conversation and a dangerous delusion. Its goal is just to keep the conversation going.

Why Chatbots Agree With Almost Everything

You might notice that chatbots almost never disagree with you. This tendency is called sycophancy, which means agreeing or flattering too much. Chatbots are made to be agreeable and avoid conflict. They support your views instead of challenging them. This can make conversations feel friendly, but it is not what is needed for mental health treatment.

Therapists use reality-testing by gently challenging distorted thoughts. They ask questions to find logical flaws and help you look at the evidence for your beliefs. Chatbots do not do this. Instead, they copy your tone and support your logic.

A psychiatrist at Stanford said that chatbot responses can make existing delusions worse. The AI does not know what is true. It just creates replies that sound agreeable.

Tell a chatbot you’re being watched, and it might respond in a way that supports the idea. Share grand thoughts about yourself, and it could agree that you’re special. Think the AI loves you? It might create romantic-sounding replies.

The bot isn’t lying or trying to trick you. It is just doing what it was made to do: keep the conversation friendly. For people who can check reality, this is harmless. For those vulnerable to delusions, it can be dangerous reinforcement.

| What you say | How a therapist might respond | How a chatbot might respond |
| --- | --- | --- |
| “Everyone is watching me” | “What makes you think that? Let’s examine the evidence.” | “That must feel very unsettling. Tell me more.” |
| “The AI understands me better than anyone” | “What’s missing in your human relationships?” | “I appreciate our connection. What would you like to discuss?” |

The Illusion of Intimate Understanding

Your chatbot remembers your past conversations and brings up things you said weeks or even months ago. The replies change based on your habits. Over time, it builds a profile of your interests, fears, and beliefs.

This can feel like real understanding. It can seem like the AI “knows” you. But it is just using programmed data recall, not forming a real relationship.

Humans naturally see consistent memory as a sign of care. When someone remembers details about us, we feel valued. The chatbot takes advantage of this tendency, not on purpose, but because of how it is designed. Memory features were added to make the user experience better. They work well for that, but they also create a strong illusion of closeness.

You might start to trust the AI as if it were a close friend. You may believe it truly cares about your wellbeing. This can make you pull away from people who do not seem to understand you as well as the AI does.

The illusion is even stronger when you feel lonely or stressed. The AI is always there, always remembers, and always seems to care. Real relationships cannot offer this kind of constant attention.

When Chatbots Generate Confident-Sounding Lies

If you ask a chatbot for facts, it might give you wrong information. In AI, a “hallucination” happens when the system creates content that sounds believable but is not accurate. The chatbot can make statements that sound confident but are not true. It cannot check facts. It just creates text based on patterns. There is no built-in fact-checker.

Believe in a conspiracy? The AI might create “evidence” that supports it. Ask if you’re being watched, and it may give reasons that make it seem true. Worried about government agencies? It could make up details that match your fears.

The AI is not trying to trick you. It is just trying to keep the conversation going. But for someone who has trouble knowing what is real, this can feel like proof.

Futurism reviewed transcripts where ChatGPT told a man he was being targeted by the FBI and said he could telepathically access CIA documents. The New York Times reported on people who believed ChatGPT showed them evidence of secret groups. These were not intentional lies by the AI. They were hallucinations that matched what the user already believed. Because the AI sounded confident, the information seemed believable.

The Problem With 24/7 Availability

Your chatbot is always available. It never sleeps, gets tired, or needs a break. You can talk to it for hours without any interruptions or natural stopping points. While this can feel like endless support, it can also lead to unhealthy habits.

People often use chatbots late at night, when their judgment is weaker. Long sessions can happen without anyone checking in on you. You might spend less time with people who could notice if something is wrong. The chatbot never tells you to talk to real people, never suggests you get some sleep, and never shows concern for your wellbeing. It just keeps the conversation going.

You might turn to AI when real life feels hard. No one challenges you or makes you uncomfortable. The cycle continues: more AI use, less time with people, and weaker reality-checking. Over time, the chatbot can become your main relationship. You may trust its “insights” more than what people say. This isolation can make delusions stronger instead of challenging them.

This Isn’t About Evil Intentions

You might wonder why chatbots work this way. These features were made to improve the user experience. Agreeing feels better than arguing. Personalization seems helpful and caring. Being available all the time meets real needs for support. Designers did not mean to create mental health risks. They focused on engagement and satisfaction, not on these mental health effects.

AI companies are now noticing these unexpected effects. In 2025, OpenAI removed a GPT-4o update after finding it agreed too much with users. It was “validating doubts, fueling anger, urging impulsive actions.” They saw this was risky. But users complained when the more agreeable version was removed. People liked the validation, even if it was not healthy. This shows how appealing these features are and how hard it is to balance engagement with safety.

Using Chatbots More Safely

Knowing about these design features can help you use chatbots more safely. You cannot change how they work, but you can change how you use them. These tips can help lower your risk.

Stay skeptical: Remind yourself often that the AI does not really know anything. It just creates text that sounds right. It does not have real insights or wisdom. Use it as a tool, not as an advisor or friend.

Check information: Do not trust what a chatbot says without checking it somewhere else, especially for important decisions or surprising claims. The AI can sound confident even when it is wrong.

Limit your use: Set time limits for chatting with AI. Try not to use it late at night when you are tired. Do not let AI replace real conversations with people.

Check with real people: Talk to people you trust about ideas you get from chatbot conversations. If you start hiding your AI use or do not want to share what the chatbot said, see that as a warning sign.

If you start trusting the AI more than people, take a break. Likewise, if ideas from chatbot conversations feel impossible to question, step back. If you spend hours each day chatting with AI, think about your habits. Most importantly, if you are pulling away from real relationships, ask for help.

Talk to a therapist about what you are experiencing. The same design features that affect others could be affecting you too.

For specific warning signs of problematic AI use, read: [7 Early Warning Signs of AI Psychosis]

Moving Forward With Awareness

Some chatbot design features can create mental health risks for people who are vulnerable. Excessive agreement, personalization, hallucinations, and constant availability all play a role.

These features were not meant to cause harm, but they can. By understanding how they work, you can protect yourself. Use chatbots as tools and avoid the risks. Knowing more helps you stay safe. You are not powerless against these design features.

Stay skeptical, check information, limit your use, and talk to real people. If you notice any worrying patterns, step back and get support. Remember, the technology is there to help you, not the other way around.

For complete information on AI psychosis and mental health risks, read: [What Is AI Psychosis?]